Abhinav Kumar defends his PhD thesis

Introduction

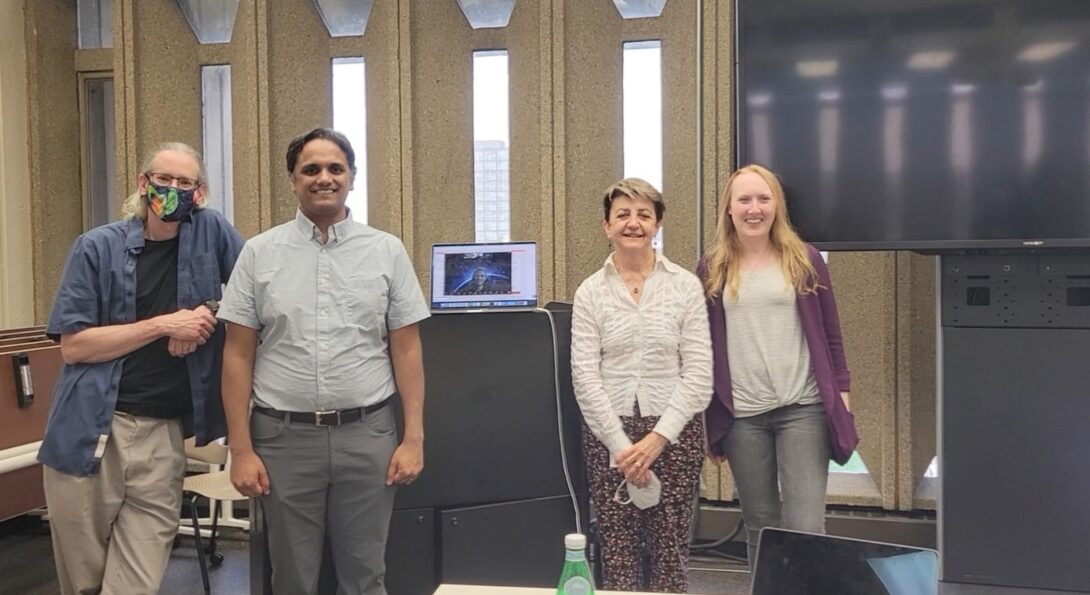

On May 16, 2022, Abhinav Kumar successfully defended his PhD thesis entitled: "Towards a Context-Aware Intelligent Assistant for Multimodal Exploratory Visualization Dialogue".

His committee included: Barbara Di Eugenio (advisor; CS, UIC), Andrew Johnson (CS, UIC), Natalie Parde (CS, UIC), Cornelia Caragea (CS, UIC), and Kallirroi Georgila (CS, University of Southern California).

Abstract:

Visualization is a core component of data exploration, providing a guided search through the data to gain meaningful insight and verify hypotheses. However, constructing visualizations is also cognitively demanding, particularly for users who are new to visualization. Such users tend to struggle to translate hypotheses and relevant questions into data attributes, select only familiar plot types such as bar, line, and pie charts, and can misinterpret visualizations once they are created. Although visualization tools can alleviate these problems by making some of the decisions involved in constructing a visualization, their software interfaces have a steep learning curve. Building on the commercial success of natural language processing in technologies such as the iPhone, Android, and Alexa, there has been increased focus on building natural language interfaces that assist users in data exploration. The broader goal of our research is to develop a natural language interface, implemented using a dialogue system architecture, that can converse with humans through language and hand pointing gestures.

In this thesis, we highlight our approach to modeling the dialogue. In particular, we built a multimodal dialogue corpus for exploring data visualizations that captures user speech and hand gestures during the conversation. As part of this work, we also developed a natural language interface capable of processing both speech and gesture input. Evaluation of our visualization system with real subjects in a live environment shows that it is effective at interpreting speech and pointing gestures and at generating appropriate visualizations. We also focused on advanced models for language processing. We modeled user intent by providing our language interpretation module with the utterances surrounding the current request as context for its interpretation. We also addressed the small size of our corpus by developing a paraphrasing approach to augment it, which improved user intent prediction. Since users can switch between making requests and thinking aloud, we also modeled the ability to distinguish between the two and used it to segment incoming utterances into the request and the surrounding think-aloud speech. Finally, we developed an approach to recognize visualization-referring language and pointing gestures, resolve them to the appropriate target visualization on the screen, and create new visualizations using the referred-to visualization as a template. We performed an intrinsic evaluation of these individual models as well as an incremental evaluation of the end-to-end system; the results indicate that the system is effective at predicting user intent and at recognizing and resolving visualization-referring language and pointing gestures.